Running OpenAI's gpt-oss on Mac Mini with MLX

OpenAI dropped their open-source, Apache-licensed “gpt-oss” models yesterday, and they're obviously getting a lot of attention.

I’ve experimented with local models over the years, mainly to assess “how smart is it?” and “how badly does it eat up local resources?” Generally they weren’t really something I’d use in anger when I had access to much more powerful OpenAI and Anthropic models.

I hadn’t done a local LLM install for quite a while though, not since I got my M4 Mac Mini with 64GB of unified CPU/GPU memory … and gpt-oss is a full reasoning model, like OpenAI’s o3, so it seemed like the time was right to do another local model eval.

Several wows here:

- Wow—it is so easy to get local models running these days. LM Studio is amazing.

- Wow—the “local models” community is incredible. Within 24 hours, a fully-optimized-for-Mac version of gpt-oss (using Apple’s MLX framework) was ready and waiting for download in LM Studio.

- Wow—gpt-oss is a for-real powerful model, not a toy like I’d experimented with a year or two back.

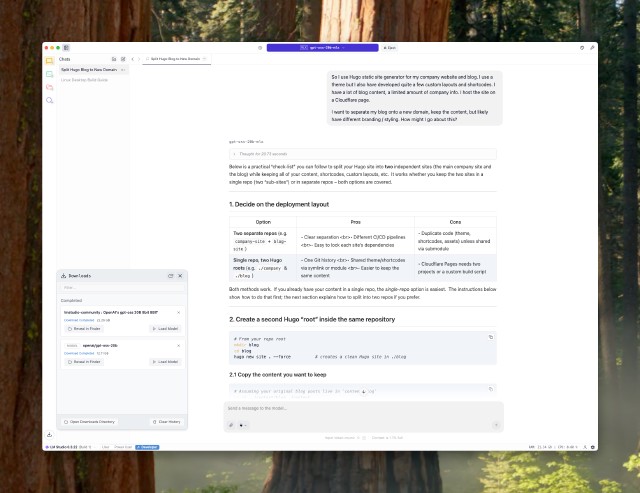
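If you'd rather poke at the model from a script than from the chat window, here's a rough sketch of how that might look. It assumes LM Studio's local server is switched on at its default address (`http://localhost:1234/v1`) and speaking the OpenAI-style chat completions format. The model name `gpt-oss` is a placeholder, not necessarily what your install calls it, and `build_request` and `ask` are just helper names I made up for illustration.

```python
import json
import urllib.request

# Assumption: LM Studio's local server is running on its default port.
BASE_URL = "http://localhost:1234/v1"
# Assumption: placeholder identifier; use the name LM Studio shows for your download.
MODEL = "gpt-oss"


def build_request(question, model=MODEL, base_url=BASE_URL):
    """Build an OpenAI-style chat completion request without sending it."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": question}],
    }
    return urllib.request.Request(
        base_url + "/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(question, timeout=300):
    """Send the question to the local server and return the reply text."""
    with urllib.request.urlopen(build_request(question), timeout=timeout) as resp:
        body = json.load(resp)
    # OpenAI-style responses put the reply in the first choice's message.
    return body["choices"][0]["message"]["content"]


# Example call (needs the local server running with a model loaded):
# print(ask("Your research question here"))
```

The generous timeout is deliberate: a reasoning model running locally can take a while before it starts answering.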

I had a few research questions floating around that I hadn’t had a chance to ask o3 about yet, so just for grins I decided to throw them at gpt-oss. I got strong, useful, reasoning-grade responses, such as this one on a complex topic relating to the Hugo static site generator:

Verdict: good enough to actually use.