(Note: See this update for a possible workaround.)

In a conversation with a venture partner yesterday, I was talking up the value that ChatGPT can bring to knowledge workers. He responded that their firm had a policy against putting any confidential data into ChatGPT. I replied that this applied to the free version and/or the old days, and that with the advent of paid subscriptions, OpenAI no longer trained its models on subscribers' inputs.

But as I was digging around my ChatGPT+ settings just now, I made an unpleasant discovery, one that reminded me of a scene from the first Independence Day movie involving the sleazy Chief of Staff Nimziki:

MOISHE

Don't tell him to shut up! You'd all be dead, were it not for my David. You didn't do anything to prevent this!

As everyone is about to besiege Moishe, the President tries to calm him down.

PRESIDENT

Sir, there wasn't much more we could have done. We were totally unprepared for this.

MOISHE

Don't give me unprepared! Since nineteen fifty whatever you guys have had that space ship, the thing you found in New Mexico.

DAVID

(embarrassed)

Dad, please...

MOISHE

What was it, Roswell? You had the space ship, the bodies, everything locked up in a bunker, the what is it, Area fifty one. That's it! Area fifty one. You knew and you didn't do nothing!

For the first time in a long time, President Whitmore smiles.

PRESIDENT

Regardless of what the tabloids have said, there were never any spacecraft recovered by the government. Take my word for it, there is no Area 51 and no recovered space ship.

Chief of Staff Nimziki suddenly clears his throat.

NIMZIKI

Uh, excuse me, Mr. President, but that's not entirely accurate.

Here’s my imagined script from the OpenAI movie featuring character Sam Altman:

For the first time in a long time, the ChatGPT+ user smiles.

CHATGPT+ SUBSCRIBER

Regardless of what the tabloids have said, OpenAI doesn't train using its paid subscribers' chats. Take my word for it, my chats aren't ending up in OpenAI's model.

Chief of Venture Altman suddenly clears his throat.

ALTMAN

Uh, excuse me, Mr. Subscriber, but that's not entirely accurate.

OpenAI’s weasel move? Technically, ChatGPT+ does allow subscribers to turn off train-the-model-using-my-data. But only if the subscriber also turns off one of ChatGPT+’s most valuable features, Chat history, which gives total recall of all past chats and lets you pick up any conversation right where you left off.

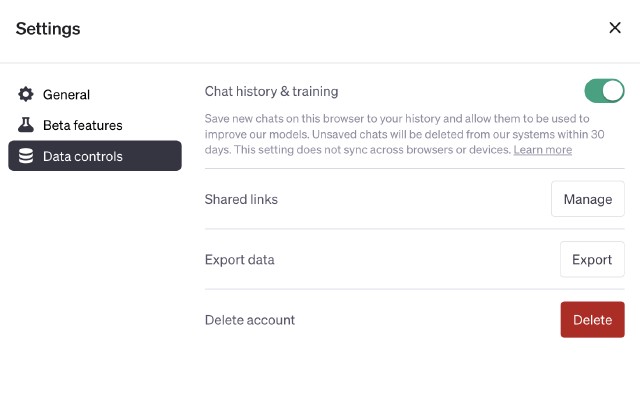

So sure, technically you can preserve privacy. All you have to do is turn off a feature which I’d argue is ChatGPT+’s most valuable addition to basic chatting. Here’s the Settings dialog:

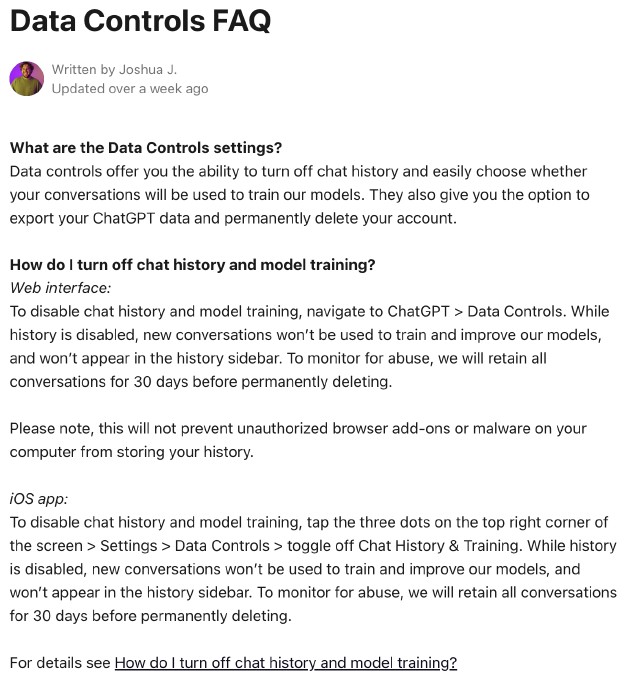

Here’s the More Information page:

Despite OpenAI’s words that make it seem like “we had to do this!”, there’s absolutely no technical justification. This is not a feature that requires model training. This is just OpenAI preserving the ability to say “Subscribers can easily turn that off!” while guaranteeing that most won’t.

Shame on you, OpenAI. And by the way, you’re shooting yourself in the foot. What a great incentive to find an alternative that doesn’t force this ridiculous choice on its paying customers. I’m already mulling workarounds.